Neuroscience: Watching the brain in action

In our daily lives, we interact with a vast array of objects—from pens and cups to hammers and cars. Whenever we recognize and use an object, our brain automatically accesses a wealth of background knowledge about the object’s structure, properties and functions, and about the movements associated with its use. We are also constantly observing our own actions as we engage with objects, as well as those of others. A key question is: how are these distinct types of information, which are distributed across different regions of the brain, integrated in the service of everyday behavior? Addressing this question involves specifying the internal organizational structure of the representations of each type of information, as well as the way in which information is exchanged or combined across different regions. Now, in eLife, Jody Culham at the University of Western Ontario (UWO) and co-workers report a significant advance in our understanding of these ‘big picture’ issues by showing how a specific type of information about object-directed actions is coded across the brain (Gallivan et al., 2013b).

A great deal is known about which brain regions represent and process different types of knowledge about objects and actions (Martin, 2007). For instance, visual information about the structure and form of objects, and of body parts, is represented in ventral and lateral temporal-occipital regions (Goodale and Milner, 1992). Visuomotor processing in support of object-directed actions, such as reaching and grasping, takes place in dorsal occipital and posterior parietal regions (Culham et al., 2003). Knowledge about how to manipulate objects according to their function is represented in inferior-lateral parietal cortex and in premotor regions of the frontal lobe (Culham et al., 2003; Johnson-Frey, 2004).

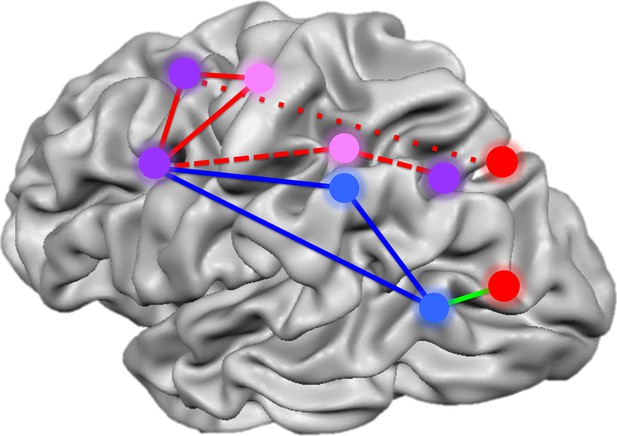

Summary of the networks of brain regions that code for movements of hands and tools.

By comparing brain activation as subjects prepared to reach towards or grasp an object using their hands or a tool, Gallivan et al. identified four networks that code for distinct components of object-directed actions. Some brain regions code for planned actions that involve the hands but not tools (red), and others for actions that involve tools but not the hands (blue). A third set of regions codes for actions involving either the hands or tools, but uses different neural representations for each (pink). A final set of areas codes the type of action to be performed, distinguishing between reaching towards an object and grasping it, irrespective of whether a tool or the hands alone are used (purple). The red lines represent the frontoparietal network implicated in hand actions, with the short dashes showing the subnetwork involved in reaching and the long dashes showing the subnetwork involved in grasping. The solid blue lines show the network implicated in tool use, while the green line connects areas that make up a subset of the perception network.

FIGURE CREDIT: IMAGE ADAPTED FROM FIGURE 7 IN GALLIVAN ET AL., 2013B

Culham and colleagues, who are based at UWO, Queen's University and the University of Missouri and include Jason Gallivan as first author, focus their investigation on the neural substrates that underlie our ability to grasp objects. They used functional magnetic resonance imaging (fMRI) to scan the brains of subjects performing a task in which they had to alternate between using their hands and a pair of pliers to reach towards or grasp an object. Ingeniously, the pliers were reverse pliers, constructed so that the business end opens when you close your fingers and closes when you open them. This made it possible to dissociate the goal of each action (e.g., ‘grasp’) from the hand movements involved in its execution, since grasping with the pliers is accomplished by opening the hand rather than closing it.

Gallivan et al. used multivariate analyses to test whether the pattern of responses elicited across a set of voxels (the small volume elements that make up an fMRI image of the brain) when a participant reaches to touch an object can be distinguished from the pattern elicited across the same voxels when the participant grasps the object. In addition, they sought to identify three classes of brain regions: those that code grasping of objects with the hand (but not the pliers), those that code grasping of objects with the pliers (but not the hand), and those that have a common code for grasping with both the hand and the pliers (that is, a code for grasping that is independent of the specific movements involved).
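The logic of this kind of pattern analysis can be illustrated with a toy simulation in Python. This is a minimal sketch under stated assumptions, not the authors' pipeline: the voxel patterns are synthetic, a simple correlation-based nearest-centroid classifier is swapped in for whatever classifier the study used, and a fixed accuracy cut-off of 0.75 (hypothetical) stands in for a proper statistical test against 50% chance. A region "decodes" an effector if reach and grasp trials can be told apart after training and testing on separate halves of that effector's trials; a region has a common code if a classifier trained on hand trials also works on pliers trials (cross-decoding). The four simulated regions mirror the red, blue, pink and purple categories in the figure; all names, such as `classify_region` and the voxel blocks `A` to `D`, are hypothetical.

```python
import math
import random

N_VOXELS = 40  # hypothetical region size
BLOCK = 10     # each condition's mean pattern drives one block of 10 voxels
ACTIONS = ("reach", "grasp")


def block_pattern(index, amplitude=1.5):
    """Mean pattern: `amplitude` on voxel block `index`, 0.0 elsewhere."""
    start = index * BLOCK
    return [amplitude if start <= v < start + BLOCK else 0.0 for v in range(N_VOXELS)]


def simulate(mean, n_trials, rng, noise_sd=1.0):
    """Single-trial patterns: the mean pattern plus independent Gaussian voxel noise."""
    return [[m + rng.gauss(0.0, noise_sd) for m in mean] for _ in range(n_trials)]


def pearson(a, b):
    """Pearson correlation between two equal-length voxel patterns."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    da = [x - ma for x in a]
    db = [y - mb for y in b]
    num = sum(x * y for x, y in zip(da, db))
    return num / math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))


def centroid(patterns):
    """Voxel-wise mean of a list of patterns."""
    return [sum(col) / len(patterns) for col in zip(*patterns)]


def accuracy(train, test):
    """Nearest-centroid correlation classifier; train/test map action -> patterns."""
    centroids = {label: centroid(pats) for label, pats in train.items()}
    correct = total = 0
    for true_label, pats in test.items():
        for p in pats:
            guess = max(centroids, key=lambda lab: pearson(p, centroids[lab]))
            correct += guess == true_label
            total += 1
    return correct / total


def classify_region(means, rng, n_trials=100, threshold=0.75):
    """Label a simulated region by which action contrasts it decodes.

    `means` maps (effector, action) -> mean pattern. `threshold` is an
    arbitrary stand-in for a significance test against 50% chance.
    """
    data = {key: simulate(m, n_trials, rng) for key, m in means.items()}
    half = n_trials // 2

    def trials(effector, part=slice(None)):
        return {a: data[(effector, a)][part] for a in ACTIONS}

    first, second = slice(0, half), slice(half, None)
    # Within-effector decoding: train on one half of the trials, test on the other.
    hand = accuracy(trials("hand", first), trials("hand", second)) > threshold
    pliers = accuracy(trials("pliers", first), trials("pliers", second)) > threshold
    # Cross-decoding: train on all hand trials, test on all pliers trials.
    cross = accuracy(trials("hand"), trials("pliers")) > threshold

    if hand and pliers:
        return "common code" if cross else "separate codes"
    if hand:
        return "hand only"
    if pliers:
        return "pliers only"
    return "no action information"


A, B, C, D = [block_pattern(i) for i in range(4)]
regions = {
    "common": {("hand", "reach"): A, ("hand", "grasp"): B,
               ("pliers", "reach"): A, ("pliers", "grasp"): B},
    "separate": {("hand", "reach"): A, ("hand", "grasp"): B,
                 ("pliers", "reach"): C, ("pliers", "grasp"): D},
    "hand_only": {("hand", "reach"): A, ("hand", "grasp"): B,
                  ("pliers", "reach"): C, ("pliers", "grasp"): C},
    "pliers_only": {("hand", "reach"): A, ("hand", "grasp"): A,
                    ("pliers", "reach"): C, ("pliers", "grasp"): D},
}
rng = random.Random(0)
results = {name: classify_region(means, rng) for name, means in regions.items()}
for name, label in results.items():
    print(f"{name}: {label}")
```

With this many simulated trials, each region should be assigned the coding type it was built with. The key design choice is the cross-decoding step: two regions can both distinguish reaching from grasping for each effector, yet only the one whose patterns generalize from hand to pliers counts as carrying a movement-independent code for the action goal.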

One thing that makes this study particularly special is that Gallivan et al. analyzed the fMRI data from the period just before the participants made an overt movement. In other words, they examined where in the brain the ‘intention’ to move is represented. Specifically, they asked: which brain regions distinguish between intentions corresponding to different types of object-directed actions? They found that activity in some regions could be used to decode upcoming actions of the hand but not the pliers (superior parieto-occipital cortex and lateral occipital cortex), whereas activity in other regions decoded upcoming actions involving the pliers but not the hand (supramarginal gyrus and left posterior middle temporal gyrus). A third set of regions uses a common code for upcoming actions of both the hands and the pliers (subregions of the intraparietal sulcus and premotor regions of the frontal lobe).

The work of Gallivan et al. significantly advances our understanding of how the brain codes upcoming actions involving the hands. Research by a number of teams is converging to suggest that such actions activate regions of lateral occipital cortex that also respond to images of hands (Astafiev et al., 2004; Peelen and Downing, 2005; Orlov et al., 2010; Bracci et al., 2012). Moreover, a previous paper from Gallivan and colleagues reported that upcoming hand actions (grasping versus reaching with the fingers) can be decoded in regions of ventral and lateral temporal-occipital cortex that were independently defined by their differential blood-oxygen-level-dependent (BOLD) responses to different categories of stimuli (e.g., objects, scenes, body parts; Gallivan et al., 2013a). Furthermore, the regions of lateral occipital cortex that respond specifically to images of hands are directly adjacent to those that respond specifically to images of tools, and also exhibit strong functional connectivity with areas of somatomotor cortex (Bracci et al., 2012).

Taken together, these latest results and the existing literature point toward a model in which the connections between visual areas and somatomotor regions help to organize high-level visual areas (Mahon and Caramazza, 2011) and to integrate visual and motor information online to support object-directed action. An exciting issue raised by this study is the degree to which tools may have multiple levels of representation across different brain regions: some regions seem to represent tools as extensions of the human body (Iriki et al., 1996), while other regions represent them as discrete objects to be acted upon by the body. The work of Gallivan et al. suggests a new way of understanding how these different representations of tools are combined in the service of everyday behavior.

References

- Goodale MA, Milner AD. 1992. Separate visual pathways for perception and action. Trends Neurosci 15:20–25. https://doi.org/10.1016/0166-2236(92)90344-8
- Johnson-Frey SH. 2004. The neural bases of complex tool use in humans. Trends Cogn Sci 8:71–78. https://doi.org/10.1016/j.tics.2003.12.002
- Mahon BZ, Caramazza A. 2011. What drives the organization of object knowledge in the brain? Trends Cogn Sci 15:97–103. https://doi.org/10.1016/j.tics.2011.01.004
- Peelen MV, Downing PE. 2005. Is the extrastriate body area involved in motor actions? Nat Neurosci 8:125; author reply 125–126. https://doi.org/10.1038/nn0205-125a

Article and author information


Publication history

- Version of Record published: May 28, 2013 (version 1)

Copyright

© 2013, Mahon

This article is distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use and redistribution provided that the original author and source are credited.

