Spike frequency adaptation supports network computations on temporally dispersed information

Figures

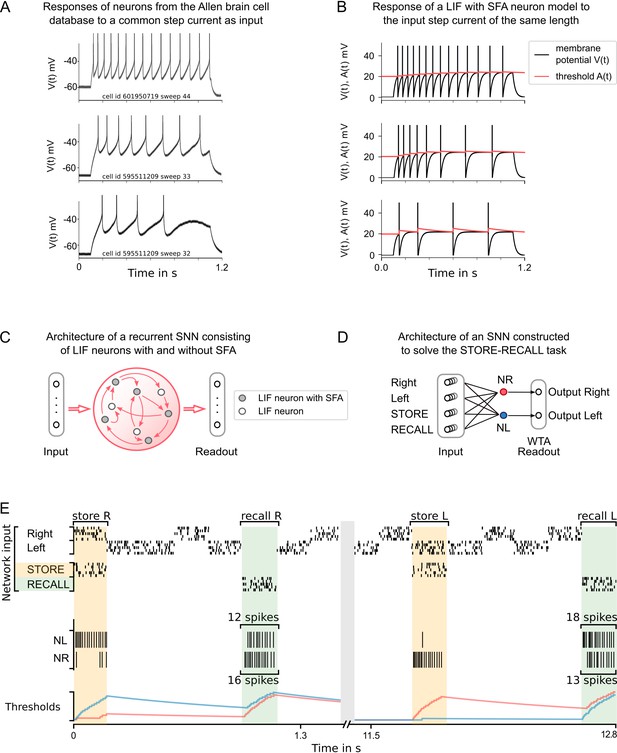

Experimental data on neurons with spike frequency adaptation (SFA) and a simple model for SFA.

(A) The response to a 1-s-long step current is displayed for three sample neurons from the Allen brain cell database (Allen Institute, 2018b). The cell id and sweep number identify the exact cell recording in the Allen brain cell database. (B) The response of a simple leaky integrate-and-fire (LIF) neuron model with SFA to the same 1-s-long step current. Neuron parameters used: top row , , ; middle row , , ; bottom row , , . (C) Symbolic architecture of a recurrent spiking neural network (SNN) consisting of LIF neurons with and without SFA. (D) Minimal SNN architecture for solving simple instances of STORE-RECALL tasks, which we used to illustrate the negative imprinting principle. It consists of four subpopulations of input neurons and two LIF neurons with SFA, labeled NR and NL, that project to two output neurons (the more strongly firing of which provides the answer). (E) Sample trial of the network from (D) for two instances of the STORE-RECALL task. The input ‘Right’ is routed to the neuron NL, which fires strongly during the first STORE signal (indicated by yellow shading of the time segment); this causes its firing threshold (shown at the bottom in blue) to increase strongly. The subsequent RECALL signal (green shading) excites both NL and NR, but NL fires less: storing the working memory content ‘Right’ has left a ‘negative imprint’ on its excitability. Hence, NR fires more strongly during recall, thereby triggering the answer ‘Right’ in the readout. After a longer pause, which allows the firing thresholds of NR and NL to reset, a trial is shown in which the value ‘Left’ is stored and recalled.
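The negative imprinting principle in (E) can be reproduced with just two adaptive-threshold LIF neurons. The sketch below is a minimal, hypothetical simulation: the class and function names, the time step, the time constants, the input currents and the threshold increment are all assumptions chosen for illustration, not the parameters used for (B) or (E), and each input subpopulation is collapsed into a single current.

```python
import math

# All values below are illustrative assumptions, not the paper's parameters.
DT = 1.0          # ms, simulation step
TAU_M = 20.0      # ms, membrane time constant
TAU_A = 1000.0    # ms, time constant with which the threshold relaxes back
V_TH = 1.0        # baseline firing threshold
BETA = 0.1        # threshold increase per unit of adaptation variable


class AdaptiveLIF:
    """Hypothetical LIF neuron whose threshold rises after each spike (SFA)."""

    def __init__(self):
        self.v = 0.0                          # membrane potential
        self.a = 0.0                          # adaptation variable
        self.alpha = math.exp(-DT / TAU_M)    # membrane decay per step
        self.rho = math.exp(-DT / TAU_A)      # adaptation decay per step

    def threshold(self):
        return V_TH + BETA * self.a

    def step(self, current):
        # Leaky integration towards the input current.
        self.v = self.alpha * self.v + (1.0 - self.alpha) * current
        spiked = self.v >= self.threshold()
        if spiked:
            self.v = 0.0      # reset membrane potential
            self.a += 1.0     # each spike raises the threshold
        self.a *= self.rho    # adaptation decays back towards baseline
        return spiked


def run_trial(stored, store_ms=200, pause_ms=300, recall_ms=200,
              store_current=3.0, recall_current=2.0):
    """Store 'Right' or 'Left', pause, then recall; return answer and spike counts."""
    nl, nr = AdaptiveLIF(), AdaptiveLIF()
    # As in (E): 'Right' is routed to NL, so 'Left' is routed to NR.
    target = nl if stored == "Right" else nr
    for _ in range(int(store_ms / DT)):
        for neuron in (nl, nr):
            neuron.step(store_current if neuron is target else 0.0)
    for _ in range(int(pause_ms / DT)):
        nl.step(0.0)
        nr.step(0.0)
    counts = {"NL": 0, "NR": 0}
    for _ in range(int(recall_ms / DT)):   # RECALL excites both neurons equally
        counts["NL"] += nl.step(recall_current)
        counts["NR"] += nr.step(recall_current)
    # The more strongly firing neuron decides: NR -> 'Right', NL -> 'Left'.
    answer = "Right" if counts["NR"] > counts["NL"] else "Left"
    return answer, counts


print(run_trial("Right"))
```

Because the stored neuron's threshold is still elevated when RECALL arrives, it fires less than its partner under identical input, so the readout reports the stored value through the *other* neuron.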

20-dimensional STORE-RECALL and sMNIST tasks.

(A) Sample trial of the 20-dimensional STORE-RECALL task in which a trained spiking neural network (SNN) of leaky integrate-and-fire (LIF) neurons with spike frequency adaptation (SFA) correctly stores (yellow shading) and recalls (green shading) a pattern. (B, C) Comparison of test accuracy for recurrent SNNs with different slow mechanisms: a dual version of SFA in which the threshold is decreased, causing enhanced excitability (ELIF), and predominantly depressing (STP-D) and predominantly facilitating (STP-F) short-term plasticity. (B) Test set accuracy of five variants of the SNN model on the one-dimensional STORE-RECALL task. Bars represent the mean accuracy over 10 runs with different network initializations. (C) Test set accuracy of the same five variants of the SNN model on the sMNIST time-series classification task. Bars represent the mean accuracy over four runs with different network initializations. Error bars in (B) and (C) indicate standard deviation.

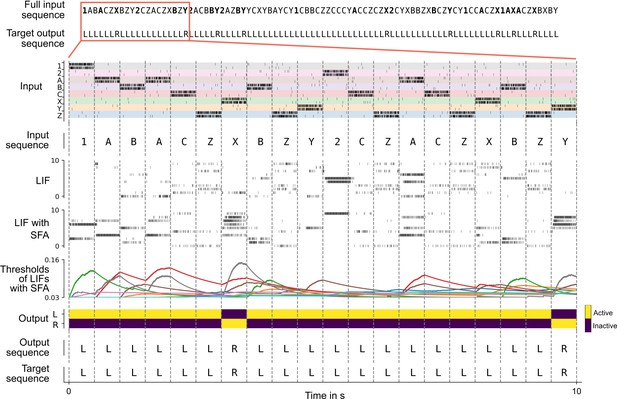

Solving the 12AX task by a network of spiking neurons with spike frequency adaptation (SFA).

A sample trial of the trained network is shown. From top to bottom: the full input and target output sequences for a trial, consisting of 90 symbols each; a blow-up of a subsequence, showing the spiking input for the subsequence, the considered subsequence, the firing activity of 10 sample leaky integrate-and-fire (LIF) neurons without SFA and 10 sample LIF neurons with SFA from the network, the time course of the firing thresholds of these neurons with SFA, the output activation of the two readout neurons, the resulting sequence of output symbols that the network produced, and the target output sequence.
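The caption does not restate the task rule. The sketch below computes target outputs under the standard 12AX rule, assuming that a target requires the letter pair to be adjacent: output R for an X that directly follows an A when the most recent digit was 1, or for a Y that directly follows a B when the most recent digit was 2, and L for every other symbol. The function name is hypothetical.

```python
def twelve_ax_targets(symbols):
    """Target output ('R' or 'L') for each symbol under the standard 12AX rule."""
    context = None   # most recent digit seen: '1' or '2'
    prev = None      # symbol immediately preceding the current letter
    out = []
    for s in symbols:
        if s in ("1", "2"):
            context, prev = s, None   # a digit sets the context for later letters
            out.append("L")
            continue
        is_target = (context == "1" and prev == "A" and s == "X") or \
                    (context == "2" and prev == "B" and s == "Y")
        out.append("R" if is_target else "L")
        prev = s
    return out


print("".join(twelve_ax_targets("1AXBYAX2BYAX")))
```

Distractor letters such as C or Z simply never complete a target pair, so the network must keep the last digit in working memory across an arbitrary number of inner-loop symbols.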

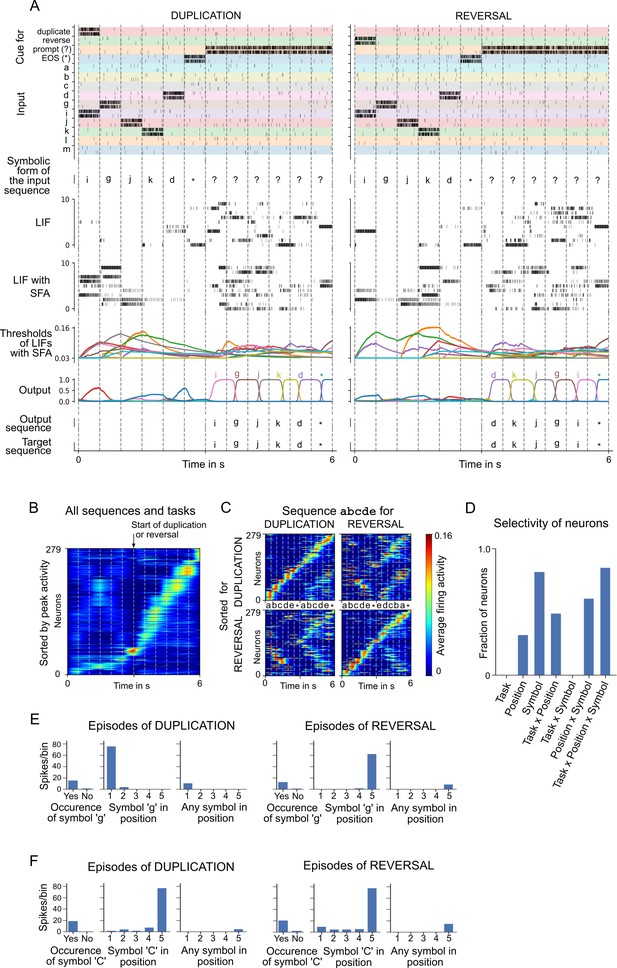

Analysis of a spiking neural network (SNN) with spike frequency adaptation (SFA) trained to carry out operations on sequences.

(A) Two sample episodes in which the network carried out sequence duplication (left) and reversal (right). Top to bottom: spike inputs to the network (subset), the sequence of symbols they encode, spike activity of 10 sample leaky integrate-and-fire (LIF) neurons (without and with SFA) in the SNN, firing threshold dynamics for these 10 LIF neurons with SFA, activation of linear readout neurons, the output sequence produced by applying argmax to them, and the target output sequence. (B–F) Emergent neural coding of 279 neurons in the SNN (after removal of neurons detected as outliers) and peri-condition time histogram (PCTH) plots of two sample neurons. Neurons are sorted by time of peak activity. (B) A substantial number of neurons were sensitive to the overall timing of the tasks, especially in the second half of trials, when the output sequence is produced. (C) Neurons sorted separately for duplication episodes (top row) and reversal episodes (bottom row). Many neurons responded to input symbols according to their serial position, but differently for different tasks. (D) Histogram of neurons categorized according to the conditions with a statistically significant effect (three-way ANOVA). (E, F) Firing activity of two sample neurons, each of which fired primarily when: (E) the symbol ‘g’ was to be written at the beginning of the output sequence, with activity that depended on the task context during the input period; (F) the symbol ‘C’ occurred in position 5 of the input, irrespective of the task context.
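A PCTH is, in essence, a neuron's firing rate over time averaged over all trials that share a condition (for example, a task context or a symbol at a given position). A minimal sketch, assuming spike times in milliseconds for each trial and a 50-ms bin; the bin width, the absence of smoothing and the function name are assumptions, not the settings used for the figure.

```python
import numpy as np


def pcth(spike_trains, conditions, condition, t_max_ms, bin_ms=50.0):
    """Trial-averaged firing rate (Hz) of one neuron over trials matching `condition`.

    spike_trains: list of per-trial spike-time lists (ms)
    conditions:   list of condition labels, one per trial
    """
    edges = np.arange(0.0, t_max_ms + bin_ms, bin_ms)
    selected = [st for st, c in zip(spike_trains, conditions) if c == condition]
    counts = np.array([np.histogram(st, bins=edges)[0] for st in selected])
    rate_hz = counts.mean(axis=0) / (bin_ms / 1000.0)   # spikes per bin -> Hz
    return edges[:-1], rate_hz


times, rate = pcth([[10, 60], [20], [110]], ["dup", "dup", "rev"], "dup", 200)
print(times, rate)
```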


Figure 4—source data 1

Raw data used to generate Figure 4D–F.

- https://cdn.elifesciences.org/articles/65459/elife-65459-fig4-data1-v1.xlsx

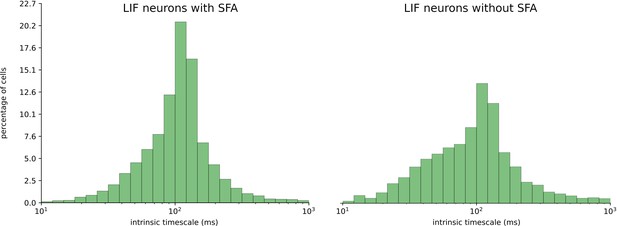

Histogram of the intrinsic time scale of neurons trained on STORE-RECALL task.

We trained 64 randomly initialized spiking neural networks (SNNs), each consisting of 200 leaky integrate-and-fire (LIF) neurons with spike frequency adaptation (SFA) and 200 without SFA, on the single-feature STORE-RECALL task. The intrinsic time scale was measured according to Wasmuht et al., 2018, on the spiking data of the SNNs solving the task after training. The histogram shows data averaged over all 64 runs. The distribution is very similar for neurons with and without SFA.
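As we understand the approach of Wasmuht et al., 2018, spike counts are binned in time, the across-trial correlation between every pair of time bins is averaged for each time lag, and an exponential decay A·(exp(−lag/τ) + B) is fitted to the result; τ is the intrinsic time scale. The sketch below follows that outline. The function names, the grid-search fit (used here instead of a nonlinear optimizer, since A and A·B enter linearly once τ is fixed) and the τ grid are our own assumptions, not the original analysis code.

```python
import numpy as np


def spike_count_autocorrelation(counts):
    """counts: array (n_trials, n_bins) of spike counts per time bin.
    Returns lags (in bins) and the mean across-trial Pearson correlation per lag."""
    n_trials, n_bins = counts.shape
    by_lag = {}
    for i in range(n_bins):
        for j in range(i + 1, n_bins):
            x, y = counts[:, i], counts[:, j]
            if x.std() == 0 or y.std() == 0:
                continue  # correlation is undefined for a constant bin
            r = np.corrcoef(x, y)[0, 1]
            by_lag.setdefault(j - i, []).append(r)
    lags = np.array(sorted(by_lag))
    return lags, np.array([np.mean(by_lag[k]) for k in lags])


def fit_timescale(lags_ms, acf, taus_ms=np.linspace(5.0, 1000.0, 2000)):
    """Fit acf ~ A * (exp(-lag / tau) + B) and return tau (ms).
    For each candidate tau, A and A*B are found by linear least squares."""
    best_tau, best_err = None, np.inf
    for tau in taus_ms:
        X = np.column_stack([np.exp(-lags_ms / tau), np.ones_like(lags_ms)])
        coef, *_ = np.linalg.lstsq(X, acf, rcond=None)
        err = np.sum((X @ coef - acf) ** 2)
        if err < best_err:
            best_tau, best_err = tau, err
    return best_tau
```

With 50-ms bins (an assumption), the lags in bins from `spike_count_autocorrelation` are converted to milliseconds by multiplying by 50 before calling `fit_timescale`.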

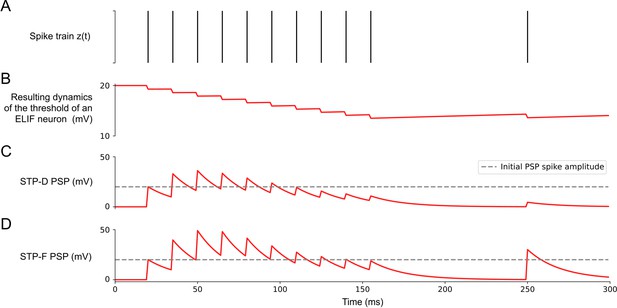

Illustration of models for an inversely adapting enhanced-excitability LIF (ELIF) neuron, and for short-term synaptic plasticity.

(A) Sample spike train. (B) The resulting evolution of the firing threshold for an inversely adapting neuron (ELIF neuron). (C, D) The resulting evolution of the amplitude of postsynaptic potentials (PSPs) evoked by the spikes in (A), taken as the spikes of the presynaptic neuron, for depression-dominant (STP-D: D ≫ F) and facilitation-dominant (STP-F: F ≫ D) short-term synaptic plasticity.
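The caption does not give the model equations. The sketch below assumes the widely used Markram-Tsodyks recursion for short-term plasticity, in which the utilization u relaxes back to U with time constant F and the available resources R recover towards 1 with time constant D. It also assumes an exponentially decaying adaptation variable that raises the threshold after each spike for SFA (sign = +1) or lowers it for an ELIF-style neuron (sign = −1). All function names and parameter values are hypothetical and illustrative only.

```python
import math


def stp_amplitudes(spike_times_ms, U, D, F, w=1.0):
    """PSP amplitudes A_n = w * u_n * R_n for a presynaptic spike train
    (Markram-Tsodyks recursion, assumed here)."""
    amps = []
    u, R = U, 1.0                                 # values at the first spike
    for n, t in enumerate(spike_times_ms):
        if n > 0:
            delta = t - spike_times_ms[n - 1]
            # Facilitation: u decays back to U with time constant F.
            u_next = U + u * (1.0 - U) * math.exp(-delta / F)
            # Depression: resources recover towards 1 with time constant D.
            R = 1.0 + (R - u * R - 1.0) * math.exp(-delta / D)
            u = u_next
        amps.append(w * u * R)
    return amps


def threshold_trace(spike_times_ms, t_max_ms, v_th=1.0, beta=0.2,
                    tau_a=200.0, sign=+1):
    """Threshold per 1-ms step: sign=+1 for SFA (rises after spikes),
    sign=-1 for ELIF-style inverse adaptation (drops after spikes)."""
    spikes = set(int(t) for t in spike_times_ms)
    rho = math.exp(-1.0 / tau_a)                  # decay per 1-ms step
    a, trace = 0.0, []
    for t in range(int(t_max_ms)):
        a = rho * a + (1.0 if t in spikes else 0.0)
        trace.append(v_th + sign * beta * a)
    return trace


train = [0, 20, 40, 60, 80]
print(stp_amplitudes(train, U=0.5, D=500.0, F=5.0))   # STP-D: D >> F
print(stp_amplitudes(train, U=0.1, D=5.0, F=500.0))   # STP-F: F >> D
```

With D ≫ F the amplitudes shrink along a regular spike train because resources are used up faster than they recover; with F ≫ D the utilization builds up and the amplitudes grow, matching the qualitative shapes sketched in (C) and (D).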

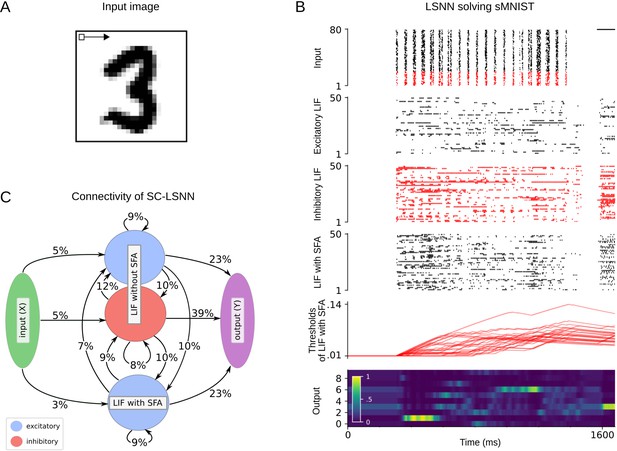

sMNIST time-series classification benchmark task.

(A) Illustration of the pixel-wise input presentation of handwritten digits for sMNIST. (B) Rows top to bottom: input encoding for an instance of the sMNIST task, network activity, and temporal evolution of firing thresholds for randomly chosen subsets of neurons in the SC-SNN, in which 25% of the leaky integrate-and-fire (LIF) neurons were inhibitory (their spikes are marked in red). The light color of the readout neuron for digit ‘3’ around 1600 ms indicates that this input was correctly classified. (C) Resulting connectivity graph between neuron populations of an SC-SNN after backpropagation through time (BPTT) optimization with DEEP R on the sMNIST task with a 12% global connectivity limit.
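Panel (A) shows a digit presented one pixel at a time. A minimal sketch of that presentation: a 28 × 28 image is flattened row by row into a 784-step sequence of gray values. The spike encoding in the second function is hypothetical (the caption does not specify it): a population of 80 input neurons with evenly spaced thresholds in [0, 1], where a neuron spikes whenever the gray value crosses its threshold between consecutive steps.

```python
import numpy as np


def pixelwise_sequence(image):
    """Flatten a 28x28 image row by row into 784 gray values in [0, 1]."""
    return np.asarray(image, dtype=float).reshape(-1)


def threshold_crossing_spikes(seq, n_neurons=80):
    """Hypothetical encoding: neuron k spikes at step t when the gray value
    crosses threshold k (in either direction) between steps t-1 and t."""
    thresholds = (np.arange(n_neurons) + 0.5) / n_neurons
    prev = np.concatenate([[0.0], seq[:-1]])       # value at the previous step
    up = (prev[:, None] < thresholds) & (seq[:, None] >= thresholds)
    down = (prev[:, None] >= thresholds) & (seq[:, None] < thresholds)
    return (up | down).astype(np.int8)             # shape (len(seq), n_neurons)


image = np.zeros((28, 28))
image[0, 5] = 1.0                                  # one bright pixel
spikes = threshold_crossing_spikes(pixelwise_sequence(image))
print(spikes.shape, int(spikes.sum()))
```

An 784-step presentation is what makes sMNIST a test of memory over long time spans: the class evidence from early rows must be retained until the readout around the end of the sequence.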

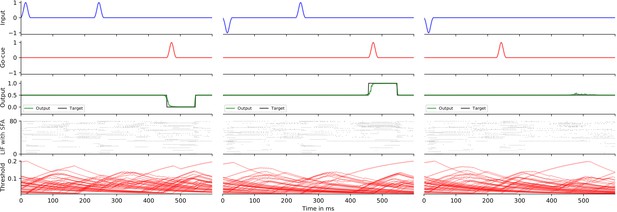

Delayed-memory XOR task.

Rows top to bottom: input signal, go-cue signal, network readout, network activity, and temporal evolution of firing thresholds.
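The caption lists the signals but not the trial structure. A hypothetical trial generator, assuming two binary values delivered as signed pulses (+1 for 1, −1 for 0) at two random times, followed by a go-cue at the final step, after which the readout should report the XOR of the two values. The function name, the step count and the pulse encoding are all assumptions.

```python
import numpy as np


def delayed_xor_trial(rng, n_steps=20):
    """Hypothetical delayed-memory XOR trial: returns input signal,
    go-cue signal and the target bit (XOR of the two stored values)."""
    bits = rng.integers(0, 2, size=2)
    # Two distinct pulse times, both strictly before the go-cue step.
    t1, t2 = sorted(rng.choice(np.arange(1, n_steps - 2), size=2, replace=False))
    signal = np.zeros(n_steps)
    signal[t1] = 1.0 if bits[0] else -1.0
    signal[t2] = 1.0 if bits[1] else -1.0
    go_cue = np.zeros(n_steps)
    go_cue[-1] = 1.0                               # respond only after the cue
    target = int(bits[0] ^ bits[1])
    return signal, go_cue, target


signal, go_cue, target = delayed_xor_trial(np.random.default_rng(0))
print(signal, go_cue, target)
```

The network must hold the first value across the delay, combine it nonlinearly with the second, and withhold the answer until the go-cue.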

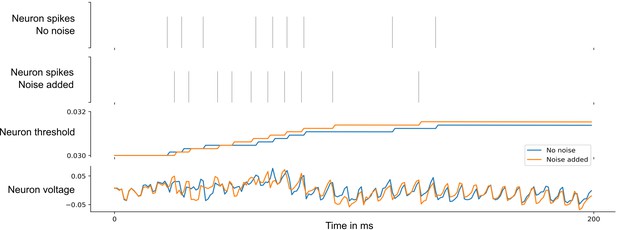

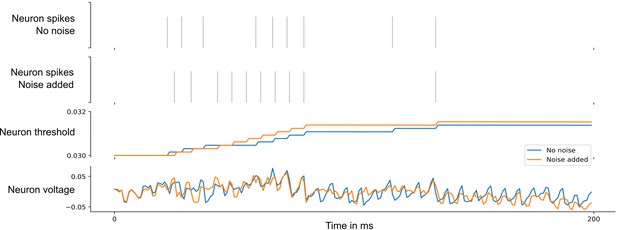

Effect of a noise current with zero mean and standard deviation 0.05 added to a single neuron in the network for the 12AX task.

Spike train of a single neuron without noise, followed by its spike train in the presence of noise; the adaptive threshold of the neuron for the noise-free spike train (shown in blue) and for the noisy spike train (shown in orange); and the corresponding membrane voltages, over a period of 200 ms.

Effect of a noise current with zero mean and standard deviation 0.075 added to a single neuron in the network for the 12AX task.

Spike train of a single neuron without noise, followed by its spike train in the presence of noise; the adaptive threshold of the neuron for the noise-free spike train (shown in blue) and for the noisy spike train (shown in orange); and the corresponding membrane voltages, over a period of 200 ms.

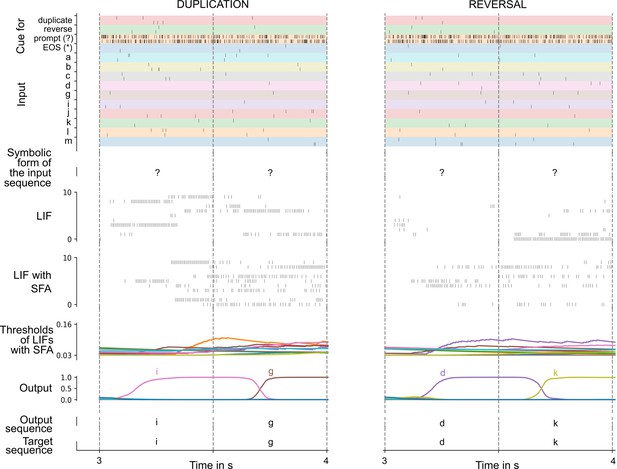

A zoom-in of the spike raster for trials solving the Duplication task (left) and the Reversal task (right).

A sample episode in which the network carried out sequence duplication (left) and sequence reversal (right), shown for the time period of 3–4 s (two steps after the start of network output). Top to bottom: spike inputs to the network (subset), the sequence of symbols they encode, spike activity of 10 sample leaky integrate-and-fire (LIF) neurons (without and with spike frequency adaptation [SFA]) in the spiking neural network (SNN), firing threshold dynamics for these 10 LIF neurons with SFA, activation of linear readout neurons, the output sequence produced by applying argmax to them, and the target output sequence.

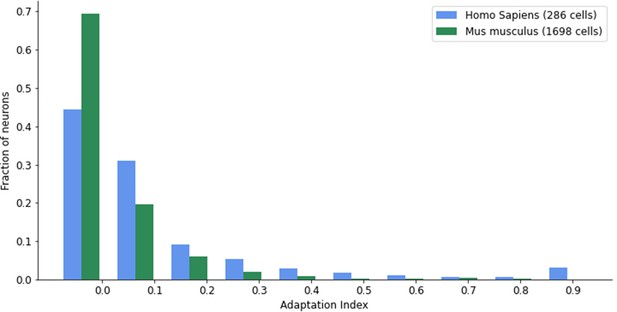

Distribution of adaptation index from Allen Institute cell measurements (Allen Institute, 2018b).
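The caption does not define the adaptation index. A common definition, which as far as we know is the one used in the Allen Institute's electrophysiology feature extraction, is the mean normalized difference of consecutive inter-spike intervals (ISIs): AI = mean over n of (ISI(n+1) − ISI(n)) / (ISI(n+1) + ISI(n)). Positive values indicate slowing firing, that is, SFA; zero indicates perfectly regular firing. A minimal sketch (the function name is hypothetical):

```python
import numpy as np


def adaptation_index(spike_times):
    """Mean normalized difference of consecutive inter-spike intervals."""
    isis = np.diff(np.asarray(spike_times, dtype=float))
    if len(isis) < 2:
        return float("nan")           # need at least two ISIs (three spikes)
    norm_diffs = (isis[1:] - isis[:-1]) / (isis[1:] + isis[:-1])
    return float(np.mean(norm_diffs))


print(adaptation_index([0, 10, 20, 30]))   # regular firing
print(adaptation_index([0, 10, 30, 60]))   # lengthening ISIs (adaptation)
```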

Tables

Recall accuracy (in %) of spiking neural network (SNN) models with different time constants of spike frequency adaptation (SFA) (rows) for variants of the STORE-RECALL task with different required memory time spans (columns).

Good task performance does not require good alignment of SFA time constants with the required time span for working memory. An SNN consisting of 60 leaky integrate-and-fire (LIF) neurons with SFA was trained for many different choices of SFA time constants for variations of the one-dimensional STORE-RECALL task with different required time spans for working memory. A network of 60 LIF neurons without SFA trained under the same parameters did not improve beyond chance level (~50% accuracy), except for the task instance with an expected delay of 200 ms where the LIF network reached 96.7% accuracy (see top row).

| SFA time constant (rows) / expected delay between STORE and RECALL (columns) | 200 ms | 2 s | 4 s | 8 s | 16 s |
|---|---|---|---|---|---|
| Without SFA ( ms) | 96.7 | 51 | 50 | 49 | 51 |
| ms | 99.92 | 73.6 | 58 | 51 | 51 |
| s | 99.0 | 99.6 | 98.8 | 92.2 | 75.2 |
| s | 99.1 | 99.7 | 99.7 | 97.8 | 90.5 |
| s | 99.6 | 99.8 | 99.7 | 97.7 | 97.1 |
| power-law dist. in [0, 8] s | 99.6 | 99.7 | 98.4 | 96.3 | 83.6 |
| uniform dist. in [0, 8] s | 96.2 | 99.9 | 98.6 | 92.1 | 92.6 |

Google Speech Commands.

Accuracy of the spiking network models on the test set compared to the state-of-the-art artificial recurrent model reported in Kusupati et al., 2018. Accuracy of the best out of five simulations for spiking neural networks (SNNs) is reported. SFA: spike frequency adaptation.

| Model | Test accuracy (%) |
|---|---|
| FastGRNN-LSQ (Kusupati et al., 2018) | 93.18 |
| SNN with SFA | 91.21 |
| SNN | 89.04 |